Event-Driven Federated Subscriptions (EDFS)

Kafka

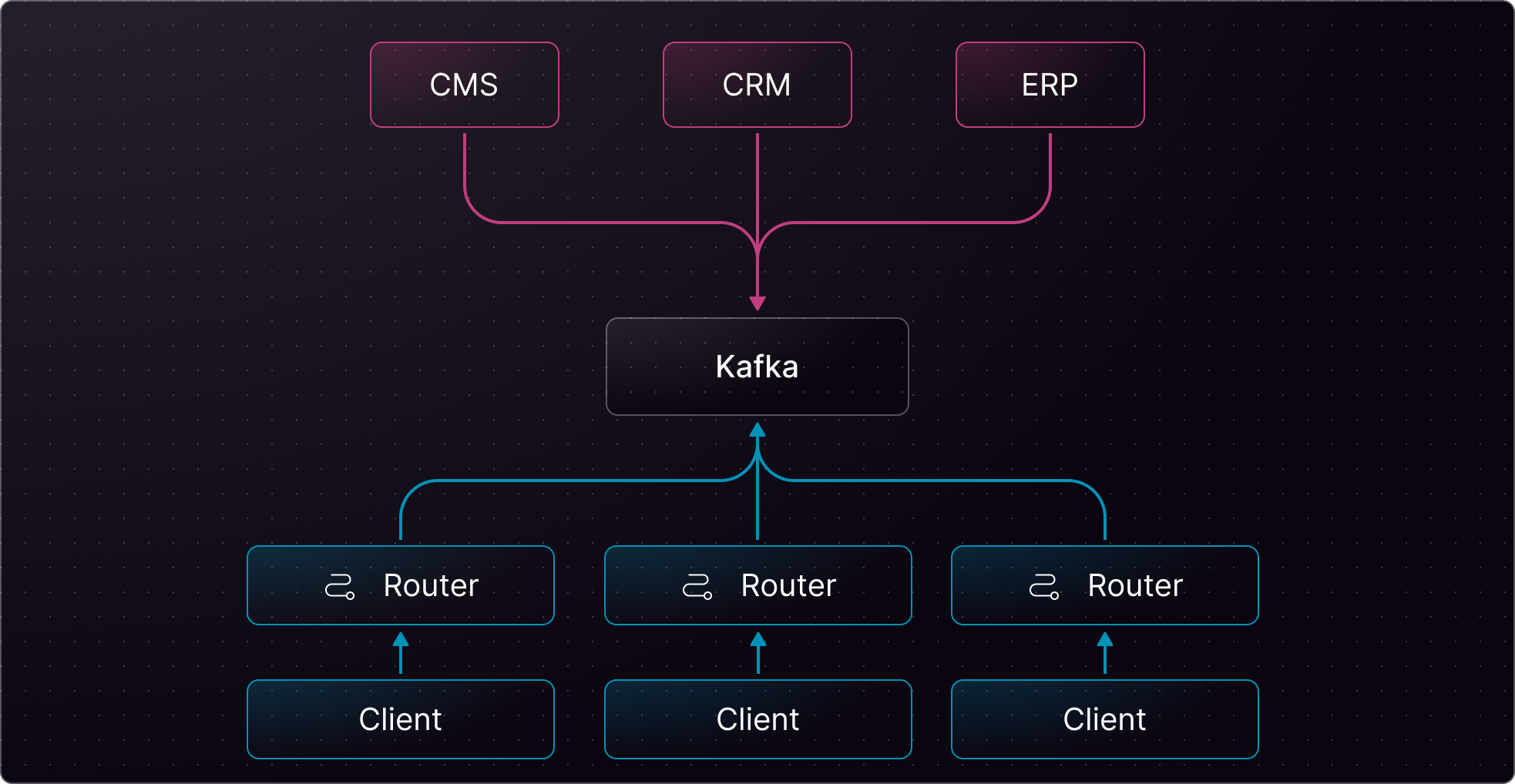

Kafka with EDFS

Minimum requirements

| Package | Minimum version |
|---|---|
| controlplane | 0.88.3 |
| router | 0.88.0 |
| wgc | 0.55.0 |

Full schema example

Here is a comprehensive example of how to use Kafka with EDFS. This guide covers publish, subscribe, and the filter directive. All examples can be modified to suit your specific needs. The schema directives and `edfs__*` types belong to the EDFS schema contract and must not be modified.
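As a rough illustration of how publish, subscribe, and the filter directive might fit together, here is a minimal sketch of an Event-Driven Graph schema. The field names (`updateEmployee`, `filteredEmployeeUpdated`), the input type, the topic name `employeeUpdated`, and the provider ID `my-kafka` are hypothetical. The directive argument shapes shown are assumptions and should be checked against the official EDFS directive definitions, which are omitted here because they must be copied verbatim from the contract.

```graphql
# Sketch only: directive and edfs__* type definitions are intentionally omitted;
# take them unmodified from the EDFS schema contract.

# Hypothetical input type for the publish mutation.
input UpdateEmployeeInput {
  name: String
  email: String
}

type Mutation {
  # Publishes an event to a Kafka topic (topic and providerId are assumed values).
  updateEmployee(id: Int!, update: UpdateEmployeeInput!): edfs__PublishResult!
    @edfs__kafkaPublish(topic: "employeeUpdated", providerId: "my-kafka")
}

type Subscription {
  # Subscribes to the topic and only forwards events whose "id" is in the list.
  filteredEmployeeUpdated: Employee!
    @edfs__kafkaSubscribe(topics: ["employeeUpdated"], providerId: "my-kafka")
    @openfed__subscriptionFilter(condition: { IN: { fieldPath: "id", values: [1, 3, 4] } })
}

# The Event-Driven Graph only references the entity by key;
# other subgraphs resolve the remaining Employee fields.
type Employee @key(fields: "id", resolvable: false) {
  id: Int! @external
}
```

The provider ID used in the directives would need to match a Kafka provider declared in the router configuration.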

Router configuration

Based on the example above, you will need a compatible router configuration.

Example query

In the example query below, one or more subgraphs have been implemented alongside the Event-Driven Graph to resolve any other fields defined on `Employee`, e.g., `tag` and `details.surname`.
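A subscription along these lines would exercise that setup. This is a sketch: the subscription field name `filteredEmployeeUpdated` is hypothetical and should be replaced with whatever subscription field your Event-Driven Graph defines. Only `tag` and `details.surname` are taken from the description above.

```graphql
subscription {
  # Hypothetical subscription field backed by a Kafka topic.
  filteredEmployeeUpdated {
    # The entity key, carried by the event itself.
    id
    # Fields resolved by other subgraphs, not by the Event-Driven Graph.
    tag
    details {
      surname
    }
  }
}
```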

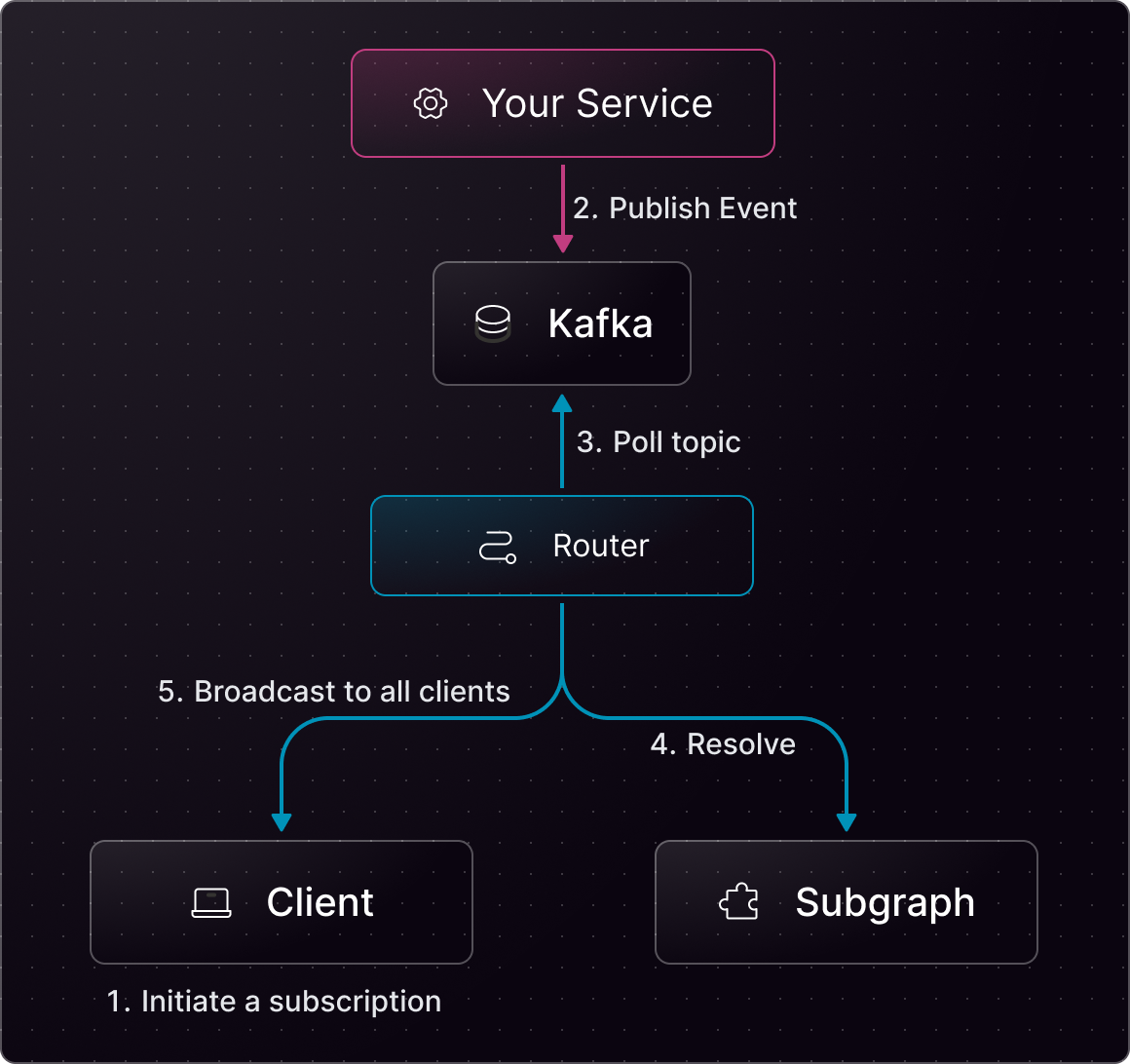

System diagram